By Ethan Phillips, Business Development Consultant at GradeMaker

In 2021, the global English proficiency test market was valued at USD 2.7 billion, and it is expected to reach USD 15.26 billion by 2030. Language assessment is becoming an increasingly competitive space, forcing organisations to find new ways to gain an advantage over their competitors.

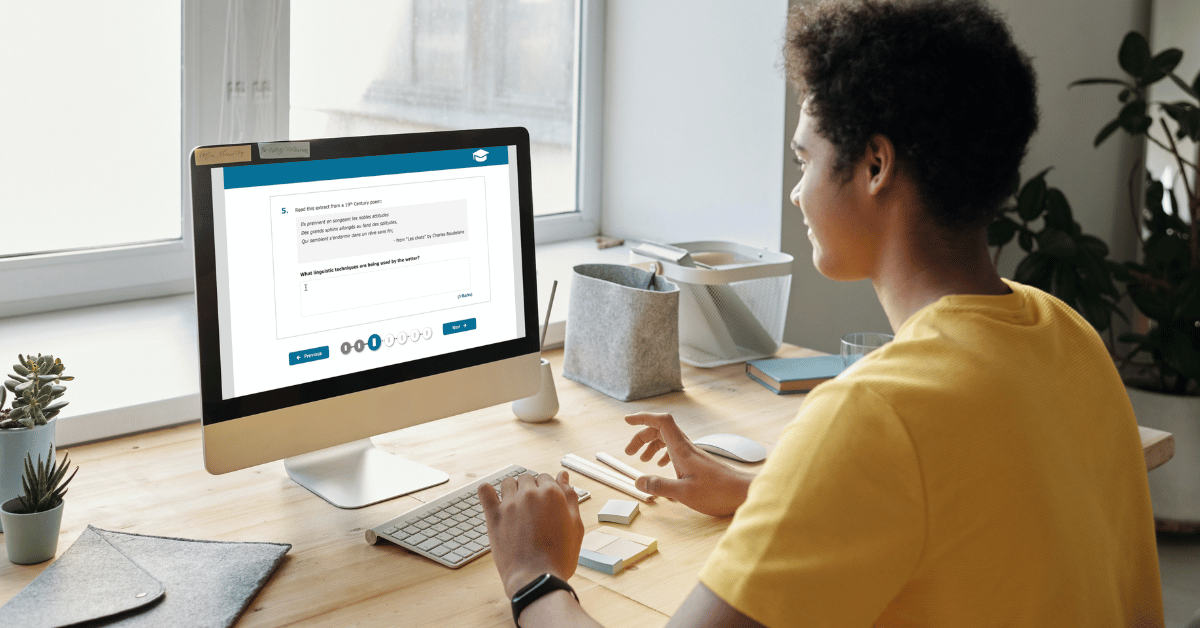

One area where language assessors are finding potential efficiency gains is new digital technology. Digital technology has revolutionised the way language tests are created, enabling test providers to offer more accurate, efficient, and accessible assessments.

However, organisations that have focused entirely on shifting the delivery of their assessments have come unstuck when the authoring side of their process could not keep up.

Advances in Digital Assessment

Here we will look at three of the most significant digital advances in testing, and at how the ‘authoring first’ approach makes organisations more competitive in the long term:

LOFT

A popular emerging digital advance is the dynamic randomisation of items in tests, also known as ‘Linear on the Fly Testing’ or ‘LOFT’.

In a traditional ‘fixed’ test, all candidates receive the same questions in the same order. However, in the LOFT approach, candidates are given a random selection of items drawn from a larger collection of items.

There are many benefits to this approach. Because candidates are presented with different questions, there is less chance of them copying from one another. This method can also reduce the likelihood of exam content being leaked before the test.

If the authoring process is sufficiently modern, authors can write individual items and submit them to an item bank, ensuring that no contributors can guess what will feature in any test.

This also makes it easier to reuse items from previous tests, while reducing the risk of candidates recognising any given question.

The most common barrier for language assessment providers when looking to move to a LOFT approach is the need for a dynamic and flexible item bank.

For randomised testing to be a valid and reliable option, each question must carry accurate metadata so that the test delivery system can pull items as required and return accurate results. This means that each question must be tagged to the relevant parts of the syllabus and to the assessment objectives. Managing all this can bring a heavy administrative burden if testers are to ensure that every question, answer, syllabus reference and mark scheme is correctly and consistently stored.

With GradeMaker Pro’s advanced item banking features, authoring for dynamically randomised tests becomes scalable. Questions can be authored directly into the item bank, with every piece of metadata safely stored for easy filtering and analysis.
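
To make the role of metadata concrete, here is a minimal Python sketch of how a delivery system might draw a LOFT form from a tagged bank. It is an illustration under stated assumptions, not a description of GradeMaker Pro or any particular delivery engine: the `Item` fields, the `blueprint` mapping and the function name `assemble_loft_form` are all hypothetical.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    objective: str  # hypothetical metadata tag, e.g. "reading"


def assemble_loft_form(bank, blueprint, seed=None):
    """Draw a random form with a fixed number of items per objective.

    `blueprint` maps an objective tag to the number of items required.
    """
    rng = random.Random(seed)

    # Group the bank by its metadata tag so each objective has its own pool.
    by_objective = defaultdict(list)
    for item in bank:
        by_objective[item.objective].append(item)

    form = []
    for objective, count in blueprint.items():
        pool = by_objective.get(objective, [])
        if len(pool) < count:
            raise ValueError(
                f"Not enough items tagged '{objective}': "
                f"need {count}, have {len(pool)}"
            )
        # Sample without replacement so no item repeats within a form.
        form.extend(rng.sample(pool, count))
    return form


# Hypothetical bank: ten reading items and ten listening items.
bank = [
    Item(f"{objective}-{n}", objective)
    for objective in ("reading", "listening")
    for n in range(10)
]
blueprint = {"reading": 3, "listening": 2}
form = assemble_loft_form(bank, blueprint, seed=1)
```

Every candidate receives a different draw, but each form still covers the same objectives. If an item is mis-tagged or a tag is missing, the draw either fails or produces an unbalanced form, which is why consistent metadata matters so much.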

Adaptive Testing

Adaptive testing (commonly known as CAT, or Computerized Adaptive Testing) is a popular option for testers wanting to measure each candidate’s ability with more precision.

This involves modifying the assessment in real time, so that each candidate receives a personalised test that ends once the candidate’s performance level has been identified. As a result, candidate ability can often be measured accurately in around half the time of a linear test.
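
The idea of adjusting a test in real time can be sketched in a few lines of Python. Production adaptive engines typically rely on item response theory; the example below swaps in a much simpler halving-step rule purely to show the select, score, update and stop loop. The function name, the difficulty scale and the simulated candidate are all assumptions made for illustration.

```python
def run_adaptive_test(bank, answer_fn, start=0.0, step=1.0, min_step=0.1):
    """Simplified adaptive loop (not an IRT-based engine).

    bank:      dict mapping item_id -> difficulty on an arbitrary scale
    answer_fn: callable taking an item_id and returning True if the
               candidate answers correctly
    """
    ability = start
    remaining = dict(bank)
    administered = []

    # Stop when the adjustment becomes too small to matter,
    # or when the bank runs out of unused items.
    while remaining and step >= min_step:
        # Pick the unused item whose difficulty is closest to the estimate.
        item_id = min(remaining, key=lambda i: abs(remaining[i] - ability))
        remaining.pop(item_id)
        administered.append(item_id)

        # Move the estimate up after a correct answer, down otherwise,
        # then halve the step so the estimate settles.
        ability += step if answer_fn(item_id) else -step
        step /= 2

    return ability, administered


# Hypothetical bank with difficulties from -2.0 to 2.0 in steps of 0.2.
bank = {f"item-{n}": n / 5 for n in range(-10, 11)}


def simulated_candidate(item_id):
    # Assumed candidate who answers correctly up to difficulty 1.0.
    return bank[item_id] <= 1.0


estimate, administered = run_adaptive_test(bank, simulated_candidate)
```

Even in this toy version, each next question depends on the difficulty value stored against the previous one, so a mis-calibrated item sends the candidate down the wrong path.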

If you are looking to offer adaptive testing, start by looking at the total number of questions in your bank. For your adaptive engine to run successfully, you will need at least 10 times more items than you would for developing linear tests.

Often language assessors find that the sheer scale of item production presents a few challenges. The difficulty of each question needs to be carefully evaluated so that the grade given to candidates is a fair reflection of their ability.

This is especially important in adaptive testing, as a badly judged question could lead to inappropriate questions following on, thereby giving individuals an unfair testing experience. Each question therefore needs to go through a stringent review process in which pre-testing and anchor items can be used easily.

The increased need for items, together with the additional quality assurance required, raises the issue of question production efficiency. Creating many more items than normal takes time, so it is important to look for efficiency gains that reduce the total time and money spent on authoring.

At GradeMaker we have seen the enormous benefits of using a custom workflow system for passing papers between reviewers and approvers automatically. The GradeMaker Pro platform has already helped multiple assessment creators to streamline their authoring processes and create the large item banks needed for adaptive testing.

Auto Paper Construction (APC)

Another new approach in the world of language testing is the automatic creation of papers, using parameters to form paper composition rules.

These pre-set filters pull items from the bank, enabling teams to automatically construct any number of highly tailored exam papers.

Like LOFT and adaptive testing, APC relies on having a large bank of approved items ready to go.

Without a big enough bank, you may not have the items available to populate a paper that meets your predefined rules.
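
As a rough illustration of how composition rules and bank size interact, the Python sketch below builds a paper section by section and fails loudly when the bank cannot satisfy a rule. It is a simplified example rather than GradeMaker Pro’s APC feature: the `Item` and `Rule` fields, the level tags and the `construct_paper` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    skill: str  # hypothetical tag, e.g. "reading"
    level: str  # hypothetical level tag, e.g. "B1"


@dataclass(frozen=True)
class Rule:
    section: str
    skill: str
    level: str
    count: int


def construct_paper(bank, rules):
    """Fill each section with items that match its rule, never reusing an item."""
    used = set()
    paper = {}
    for rule in rules:
        matches = [
            item for item in bank
            if item.skill == rule.skill
            and item.level == rule.level
            and item.item_id not in used
        ]
        if len(matches) < rule.count:
            raise LookupError(
                f"Section '{rule.section}' needs {rule.count} items "
                f"but only {len(matches)} match"
            )
        # Take the first matches for simplicity; a real system might
        # randomise or balance the selection instead.
        chosen = matches[:rule.count]
        used.update(item.item_id for item in chosen)
        paper[rule.section] = chosen
    return paper


# Hypothetical bank: four B1 reading items and three B1 listening items.
bank = (
    [Item(f"R{n}", "reading", "B1") for n in range(4)]
    + [Item(f"L{n}", "listening", "B1") for n in range(3)]
)
rules = [
    Rule("Section A", "reading", "B1", 3),
    Rule("Section B", "listening", "B1", 2),
]
paper = construct_paper(bank, rules)
```

Asking the same bank for a second, non-overlapping paper would already fail on the reading section, which is the practical meaning of not having a big enough bank.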

GradeMaker Pro’s APC feature allows test assemblers to use granular item searches, create complex rules for how items are presented, format paper sections, and save the result as a template for creating any number of individual papers. Once generated, each paper passes through a final review and approval workflow to ensure it is ready to publish.

What's next?

If you are looking at bringing your language assessments into the digital age, GradeMaker gives you a complete, centralised authoring platform that also improves the efficiency of your exam development process. If you would like to look at how GradeMaker works for language testers, please see a short 5-minute video here:
