Quality will always be the primary consideration when developing high-stakes exams.

So when you are moving from paper to online test delivery, a key challenge is keeping your quality assurance (QA) process robust as you introduce new workflows, new item types, and new ways of trialling tests.

It’s an ideal time to consider how technology can support quality assurance.

Maybe there are aspects of the QA process that can be automated, to ensure issues are flagged to the right people at the right time. Or perhaps there are other parts of the production process that can be automated to free up time for more rigorous quality checking.

In this post we explore five areas of exam authoring in which technology can enhance quality control.

Adopt a single source of authoring

If you are transitioning to onscreen exams, it’s likely you’ll be running two separate development processes simultaneously — one for paper, one for onscreen delivery.

A common approach is to develop paper tests using standard office applications, and onscreen tests using the test player’s authoring tool.

One of the challenges of this approach is that your quality control processes will be limited to the capabilities of the available tools. As a result, an error may creep into an online test that would normally be picked up for a paper test, or vice versa.

One alternative is to develop all your tests in a shared system and then transfer approved exam content into your test player for onscreen delivery. But additional QA steps would be required to ensure errors are not introduced during the transfer. It’s also hard to check the appropriateness of new computer-based testing (CBT) item types if evaluators can’t see exactly how they will appear to candidates at the review stage.

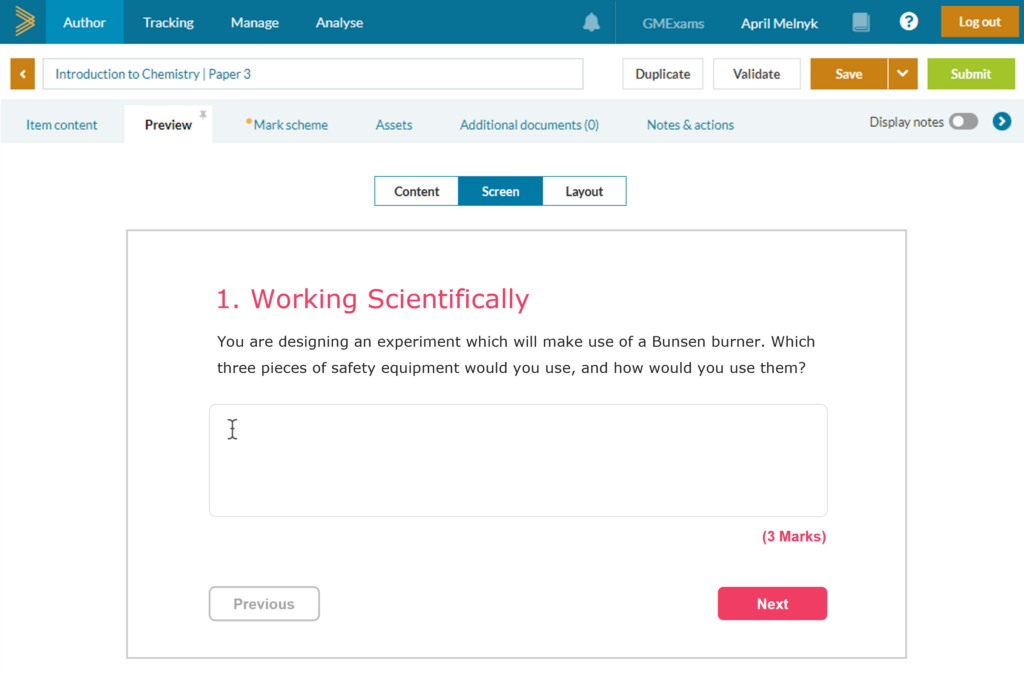

Our advice is to use ‘platform agnostic’ authoring software to develop your exam content. The software you choose should let you preview how items and tests will look to candidates in any medium as part of your quality control process. It should also export test content automatically to your chosen test player for scheduling and delivery, and offer a reliable export for printed papers.

In GradeMaker, reviewers and approvers can preview how questions will appear to candidates in paper or onscreen format. Here, a preview is provided for a test destined for the Trifork online test delivery system. When the test is ready, it can be sent to Trifork as a QTI file at the press of a button.

Move to item banking

Item banking has a number of efficiency and security benefits, and it is a necessary tool if you are moving to adaptive or on-demand testing.

But item banking can also improve test quality, by allowing teams to attach up-to-date statistical data to items, such as item response theory (IRT) difficulty and discrimination values. This data can be used for setting standards by assigning anchor items to tests.

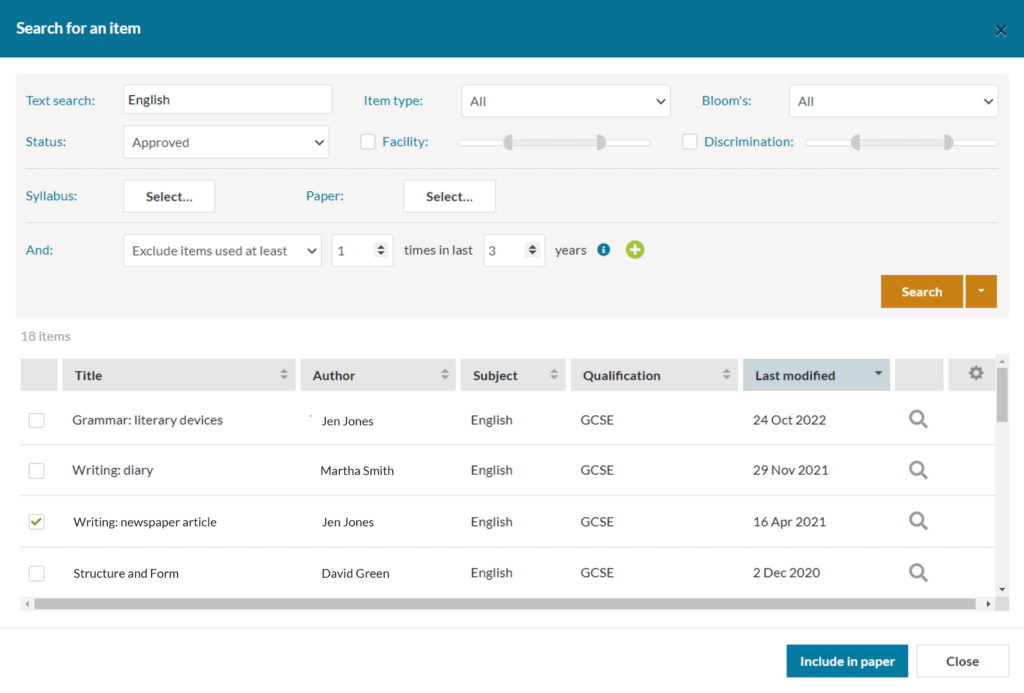

Item banking also allows for richer metadata tagging on questions. This can assist in the construction of comparable tests in which items measure a range of abilities.

Item metadata also allows for granular searches of the item bank, aiding the development of broad and balanced tests.
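To make the idea of a granular search concrete, here is a minimal Python sketch of a tagged item bank. It is an illustration only and not how any particular authoring product stores or queries items: the `Item` fields, the `search` function, and the sample values are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    """A banked question with illustrative metadata (field names are assumptions)."""
    item_id: str
    topic: str
    skill: str
    item_type: str
    difficulty: Optional[float] = None      # IRT difficulty, if pre-tested
    discrimination: Optional[float] = None  # IRT discrimination, if pre-tested


def search(bank, *, topic=None, skill=None, min_b=None, max_b=None):
    """Return the items that match every criterion that was supplied."""
    results = []
    for item in bank:
        if topic is not None and item.topic != topic:
            continue
        if skill is not None and item.skill != skill:
            continue
        if min_b is not None or max_b is not None:
            if item.difficulty is None:
                continue  # no statistics yet, so it cannot match a difficulty range
            if min_b is not None and item.difficulty < min_b:
                continue
            if max_b is not None and item.difficulty > max_b:
                continue
        results.append(item)
    return results


# Made-up sample data for the sketch.
bank = [
    Item("Q1", "algebra", "recall", "mcq", difficulty=-0.8, discrimination=1.1),
    Item("Q2", "algebra", "reasoning", "short_answer", difficulty=0.6, discrimination=1.4),
    Item("Q3", "geometry", "reasoning", "mcq"),  # not yet pre-tested
]

matches = search(bank, topic="algebra", min_b=0.0, max_b=1.0)
print([item.item_id for item in matches])  # prints ['Q2']
```

Items without statistics are deliberately excluded from difficulty-range searches, so a test builder looking for items of known difficulty never picks up an item that has not been trialled.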

And applying quality assurance to individual items, rather than only to full tests, makes for more focused quality checks: first push individual items through the required review steps, then do the same with a test constructed from banked items.

GradeMaker allows teams to adopt an item banking approach, as well as retain a more traditional ‘Whole Paper Authoring’ approach to writing exams.

Automate workflow

Chances are, your test development workflows are managed through a manual process that may look a bit like this:

- A project manager commissions the author

- The author writes a draft in a document

- The author emails the draft back to the project manager

- The project manager emails it to reviewers

- The project manager collates their responses for discussion in evaluation meetings, and tracks each stage in a separate spreadsheet

This process can be difficult to manage, which in turn causes issues around quality assurance. Important checks may be missed or raised too late in the process.

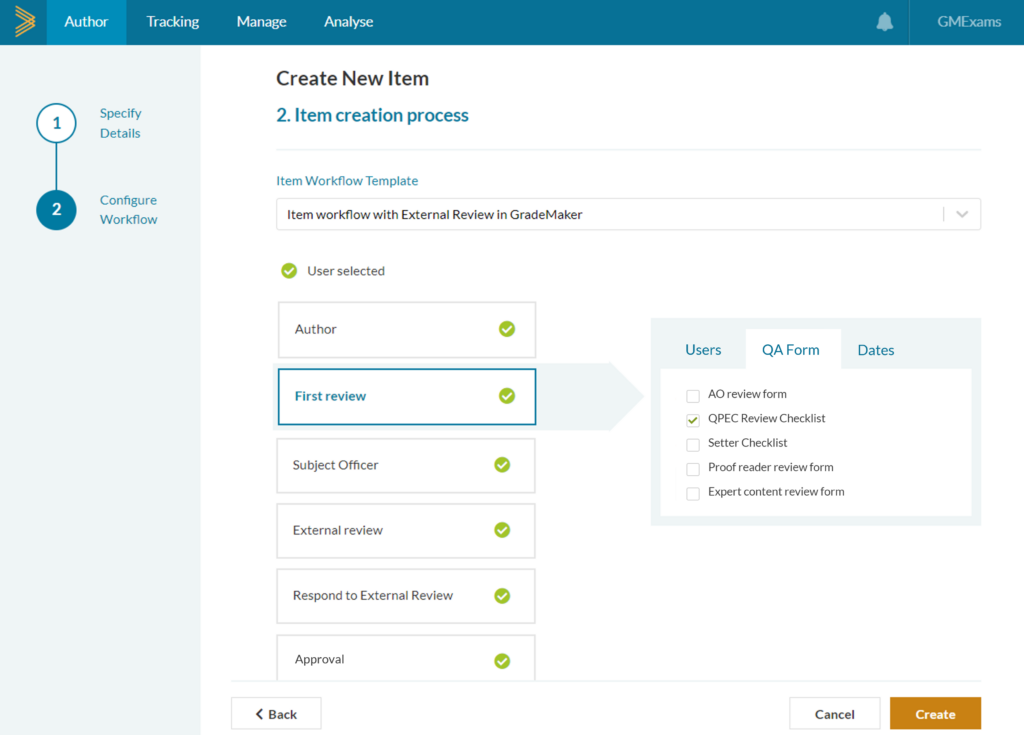

This can be avoided by using a workflow management tool. Good authoring software will allow project managers to create tailored workflow templates and assign users to each step. Each contributor’s task can then be automatically assigned, with the correct tools and QA briefing materials made available to each user based on their role and permissions level.
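As a rough illustration of what a workflow template does, the Python sketch below models an ordered list of steps, assigns each step to a role, and refuses to advance unless the right person completes it. The class names (`Step`, `WorkItem`), the role names, and the users are hypothetical, and the "notification" is just a returned string standing in for whatever a real system would send.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    role: str  # the role allowed to complete this step


@dataclass
class WorkItem:
    item_id: str
    steps: list      # ordered Step objects, i.e. the workflow template
    assignees: dict  # role -> user name
    position: int = 0
    log: list = field(default_factory=list)

    def current(self):
        """Return the pending step, or None when the workflow is complete."""
        return self.steps[self.position] if self.position < len(self.steps) else None

    def notify(self):
        """Stand-in for a real notification: report who is up next."""
        step = self.current()
        if step is None:
            return f"{self.item_id}: workflow complete"
        return f"{self.item_id}: '{step.name}' assigned to {self.assignees[step.role]}"

    def complete(self, user):
        """Advance only if the user holds the role for the pending step."""
        step = self.current()
        if step is None:
            raise ValueError("workflow already complete")
        if self.assignees.get(step.role) != user:
            raise PermissionError(f"{user} is not assigned to '{step.name}'")
        self.log.append((step.name, user))  # audit trail of who did what
        self.position += 1
        return self.notify()


template = [Step("draft", "author"), Step("review", "reviewer"), Step("approve", "approver")]
item = WorkItem("Q17", template, {"author": "asha", "reviewer": "ben", "approver": "carol"})

print(item.notify())
print(item.complete("asha"))
```

Because every step is recorded in `log`, the tracking spreadsheet from the manual process becomes a by-product of the workflow rather than a separate task.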

In GradeMaker, when an item or test is ready for review, the assigned reviewer receives a notification. The reviewer can then comment on the item and recommend next steps, and may also be asked to complete a customised QA form. Feedback is submitted for approval by a specified deadline.

Access test coverage data

A particular QA challenge for test developers is to ensure exams fairly test candidates’ knowledge across the subject domain.

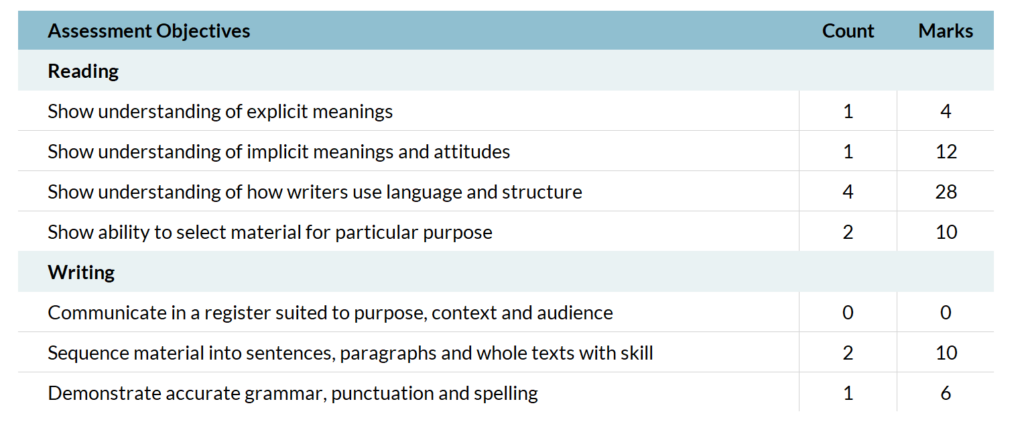

Exam authoring technology makes this easier to check through item metadata that records how each question relates to the specification, such as topic area, skill, assessment objective, or competency statement.

At the test construction stage, this metadata can be automatically aggregated, allowing assessment designers to download a wide range of statistics for their draft test.

Being able to access this information on demand makes it easier to evaluate test coverage at each stage of the test’s development. For example, evaluators can quickly check that the test covers a broad enough sample of the syllabus, that there is sufficient variety of item types, that correct answers are evenly distributed across multiple-choice questions, and that the mean difficulty and discrimination values across all pre-tested questions are acceptable.
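The checks above amount to simple aggregation over item metadata. The Python sketch below shows one way it could look, using only the standard library. The dictionary keys, the `coverage_report` function, and the sample values are assumptions invented for the example, not a real export format.

```python
from collections import Counter
from statistics import mean

# Made-up draft test: each dict is one item's metadata.
draft_test = [
    {"id": "Q1", "topic": "algebra", "type": "mcq", "key": "A",
     "difficulty": -0.5, "discrimination": 1.2},
    {"id": "Q2", "topic": "algebra", "type": "mcq", "key": "C",
     "difficulty": 0.3, "discrimination": 0.9},
    {"id": "Q3", "topic": "geometry", "type": "short_answer", "key": None,
     "difficulty": 1.0, "discrimination": 1.5},
    {"id": "Q4", "topic": "statistics", "type": "mcq", "key": "A",
     "difficulty": None, "discrimination": None},  # not yet pre-tested
]


def coverage_report(items):
    """Aggregate item metadata into the figures a reviewer might check."""
    pretested = [i for i in items if i["difficulty"] is not None]
    return {
        "topics": Counter(i["topic"] for i in items),
        "item_types": Counter(i["type"] for i in items),
        # Distribution of correct-answer keys across multiple-choice items only.
        "mcq_keys": Counter(i["key"] for i in items if i["type"] == "mcq"),
        # Means are taken over pre-tested items only; None if there are none.
        "mean_difficulty": mean(i["difficulty"] for i in pretested) if pretested else None,
        "mean_discrimination": mean(i["discrimination"] for i in pretested) if pretested else None,
    }


for name, value in coverage_report(draft_test).items():
    print(name, value)
```

In this sample, the key distribution shows two of the three multiple-choice items share the answer A, which is the kind of imbalance that is easy to miss when reading a paper question by question.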

GradeMaker allows for rich metadata to be attached to items, including syllabus mapping and performance data. This allows test developers to see the composition of their test during development.

Track version history

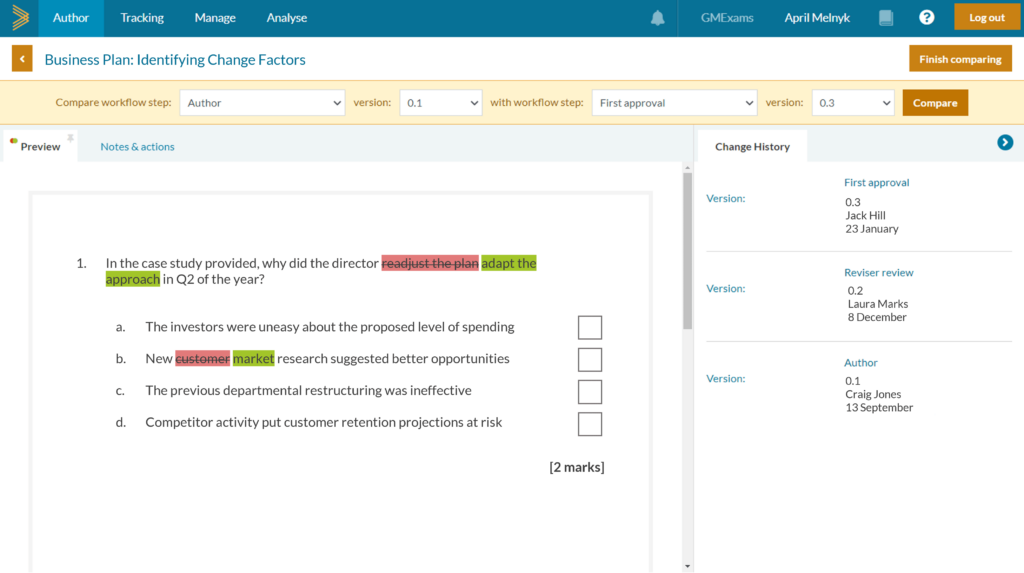

Version control is another common QA challenge. As a draft item or test moves through a series of review cycles, it’s easy to lose track of how and why changes were made.

This can be an issue if a change made to resolve one problem causes a separate issue that is only identified later on. It also matters if a mistake finds its way into a final exam and an audit is required to identify how it happened.

The Track Changes functionality of standard office software offers a partial solution. But it is not designed for the specific requirements of exam writers, who need to interrogate individual workflow stages in more depth.

In GradeMaker, test developers can compare one version with another to understand who changed what and when.
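For readers who want a feel for what a version comparison involves, here is a minimal Python sketch built on the standard library's `difflib`. It is not GradeMaker's implementation: the `Version` record, the `compare` function, and the sample item, authors, and timestamps are all invented for illustration.

```python
import difflib
from dataclasses import dataclass


@dataclass
class Version:
    number: int
    author: str
    timestamp: str  # ISO 8601 string, kept simple for the sketch
    note: str       # why the change was made
    text: str


def compare(old, new):
    """Return a who/when/why header plus a line-by-line diff of the item text."""
    header = f"v{old.number} -> v{new.number} by {new.author} at {new.timestamp}: {new.note}"
    diff = difflib.unified_diff(
        old.text.splitlines(),
        new.text.splitlines(),
        fromfile=f"v{old.number}",
        tofile=f"v{new.number}",
        lineterm="",
    )
    return "\n".join([header, *diff])


# Made-up versions of a single item.
v1 = Version(1, "asha", "2024-03-01T10:00", "first draft",
             "What is 7 x 8?\nA) 54\nB) 56")
v2 = Version(2, "ben", "2024-03-04T15:30", "distractor too close to key",
             "What is 7 x 8?\nA) 48\nB) 56")

print(compare(v1, v2))
```

Storing the reason for each change alongside the text is what separates this from plain tracked changes: an auditor sees not just that option A changed, but who changed it, when, and why.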
